For most of my professional life, I have ignored the various goings-on within the A.I. community. First, as I have previously explained, I didn’t think that computers had the requisite computational power. Second, I figured that since existing research hadn’t solved the problem, following the current line of thinking might cloud the way to an innovative alternative solution.

Fast forward to today and, after what I hope is an epiphany on the Strong A.I. problem set, I have started to read the various papers of the last forty years or so. First, I am struck by how much early work has been done on such a difficult problem set. Second, and sadly, many of my own thoughts have already been researched in depth by lots of different groups. That’s good news, because individual researchers have sometimes spent a lifetime on very narrow but important aspects of the problem set. But it does raise the question: why hasn’t anyone put all the various research together to build Strong A.I.?

Here are my thoughts on the subject. Over the last couple of days in particular, I have been reading Speech and Language Processing, by Jurafsky and Martin. I have almost finished reading the textbook and watching their videos online from Stanford. As I mentioned, I have been fortified by the approaches discussed in the book, because I had arrived at similar approaches independently.

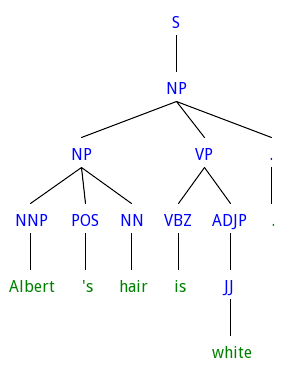

I have a pretty good idea of how to build the Semantic Analysis step in the parser, generate First Order Predicate Logic from it, and build the resulting inference engine (a future post). But how do I get to the last step? In particular, what should happen after the Semantic Analysis step in the parser? My initial thought was to use “Actions as Meaning”, similar to Terry Winograd’s thinking behind his SHRDLU system. In his own words:

“One of the basic viewpoints underlying the model is that all language use can be thought of as a way of activating procedures within the hearer. We can think of an utterance as a program – one that indirectly causes a set of operations to be carried out within the hearer’s cognitive system.”

What does this mean? It means the knowledge representation system needs to be able to run functions (actions) and maintain state. Take, for instance, this exchange with SHRDLU:

Person: Pick up a big red block.

Computer: OK.

Person: Grasp the pyramid.

Computer: I don’t understand which pyramid you mean.

Person (changing their mind): Find a block which is taller than the one you are holding and put it into the box.

Computer: By “it”, I assume you mean the block which is taller than the one I am holding.

Computer: OK.

Person: What does the box contain?

Computer: The blue pyramid and the blue block.
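To make "run functions and maintain state" concrete, here is a minimal sketch of a blocks-world state model in Python. It is not SHRDLU's actual implementation; the `Block` and `World` classes, their method names, and the block heights are all made up for illustration, and the sketch ignores details such as the robot having to put down one block before grasping another. It only shows how an utterance can map to a procedure that either changes the state or fails on an ambiguous reference.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Block:
    # Hypothetical object record; attribute names are illustrative only.
    name: str
    shape: str            # "block" or "pyramid"
    color: str
    height: int
    location: str = "table"


@dataclass
class World:
    """Toy state model: the known objects, plus what the hand is holding."""
    objects: dict = field(default_factory=dict)
    holding: Optional[str] = None

    def add(self, obj: Block) -> None:
        self.objects[obj.name] = obj

    def find(self, **attrs) -> list:
        """Return every object whose attributes match all of attrs."""
        return [o for o in self.objects.values()
                if all(getattr(o, k) == v for k, v in attrs.items())]

    def pick_up(self, **attrs) -> str:
        matches = self.find(**attrs)
        if len(matches) != 1:
            # An ambiguous (or empty) reference cannot be acted upon.
            return "I don't understand which one you mean."
        self.holding = matches[0].name
        matches[0].location = "hand"
        return "OK."

    def put_in(self, name: str, container: str) -> str:
        self.objects[name].location = container
        if self.holding == name:
            self.holding = None
        return "OK."

    def contents(self, container: str) -> list:
        return sorted(o.name for o in self.objects.values()
                      if o.location == container)


world = World()
world.add(Block("big red block", "block", "red", 3))
world.add(Block("blue block", "block", "blue", 5))
world.add(Block("blue pyramid", "pyramid", "blue", 2, location="box"))
world.add(Block("green pyramid", "pyramid", "green", 2))

first_reply = world.pick_up(shape="block", color="red")   # unique match
second_reply = world.pick_up(shape="pyramid")             # two pyramids exist

# "Find a block which is taller than the one you are holding..."
held = world.objects[world.holding]
taller = [o for o in world.find(shape="block") if o.height > held.height]
world.put_in(taller[0].name, "box")

print(first_reply, second_reply, world.contents("box"))
```

Each sentence becomes a call that reads or mutates `world`, so the answer to "What does the box contain?" is just a query against the state left behind by the earlier commands.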

Such systems contain a model of the current state of their domain. But what does that mean for Strong A.I., where the domain is limitless? Does that mean the A.I. engine must model the entire universe, from past to present to many possible futures?

Similar to the SHRDLU commands above, consider the following:

“Bob went to the store.”

and

“Bob goes to the store each week.”

In the first sentence, using “Actions as Meaning”, we would model the movement of Bob and change his location to the store. The second would set up a recurring event of Bob’s adventures to the store. To understand the meaning of English, does this level of detail need to be remembered and “acted” upon in the knowledge system?
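As a rough illustration of the difference between the two sentences, the sketch below treats the past-tense sentence as a one-off state change and the habitual sentence as a stored rule that can be projected forward. The `KnowledgeBase` class, its method names, and the start date are hypothetical; a real system would derive these calls from the parser's semantic output rather than have them written by hand.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class KnowledgeBase:
    # Hypothetical store: entity -> last known place.
    locations: dict = field(default_factory=dict)
    # Hypothetical list of habits: (entity, place, interval).
    recurring: list = field(default_factory=list)

    def went_to(self, entity: str, place: str) -> None:
        """One-off past event: update the entity's location."""
        self.locations[entity] = place

    def goes_to_every(self, entity: str, place: str,
                      interval: timedelta) -> None:
        """Habitual event: store a rule instead of changing current state."""
        self.recurring.append((entity, place, interval))

    def expected_visits(self, entity: str, place: str,
                        start: date, count: int) -> list:
        """Project a stored habit forward into concrete dates."""
        for who, where, interval in self.recurring:
            if (who, where) == (entity, place):
                return [start + interval * i for i in range(count)]
        return []


kb = KnowledgeBase()
kb.went_to("Bob", "store")                             # "Bob went to the store."
kb.goes_to_every("Bob", "store", timedelta(weeks=1))   # "...each week."

# The start date is an arbitrary assumption chosen for the example.
visits = kb.expected_visits("Bob", "store", date(2024, 1, 1), 3)
print(kb.locations["Bob"], visits)
```

Even this toy version shows the cost in question: every sentence understood this way leaves something behind in the model, either a changed fact or a new rule.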

My initial thought is yes, it does. After all, that’s what we do as humans. Amazingly, we all keep these kinds of mental models around in our heads. Maybe the true genius is in how to create the model in such a way that the model itself doesn’t take up the same amount of physical matter as what we are modeling. At what level do you model? If we could, would we model all of Bob’s atoms and their interactions as he moves to the store? Clearly, our brains don’t have this information, so it must not be needed to build an artificial brain. However, there are many fields of study, such as microbiology, that would appreciate this level of atomic modeling, and our brains are used to think about those problems too.

And maybe this is why no one has built an artificial brain yet. To do so means building a model flexible enough to model the universe: models of the infinitely small and the infinitely large, with enough common sense to abstract from one to the other.